Outstanding Paper Award for helping AI agents better understand their human partners

A project led by PhD student Cristian-Paul Bara, undergraduate alum Sky CH-Wang, and Prof. Joyce Chai has been recognized with an Outstanding Paper Award at the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP). In “MindCraft: Theory of Mind Modeling for Situated Dialogue in Collaborative Tasks,” the researchers designed a new framework and collected a new dataset for training AI agents to understand their human partners during collaboration.

For autonomous agents to truly integrate into the human world, they’ll need to be able to collaborate on human terms. That means understanding humans and maintaining a sense of common ground while collaborating and communicating. This ability to reason about a conversation partner’s beliefs and mental state is referred to as theory of mind.

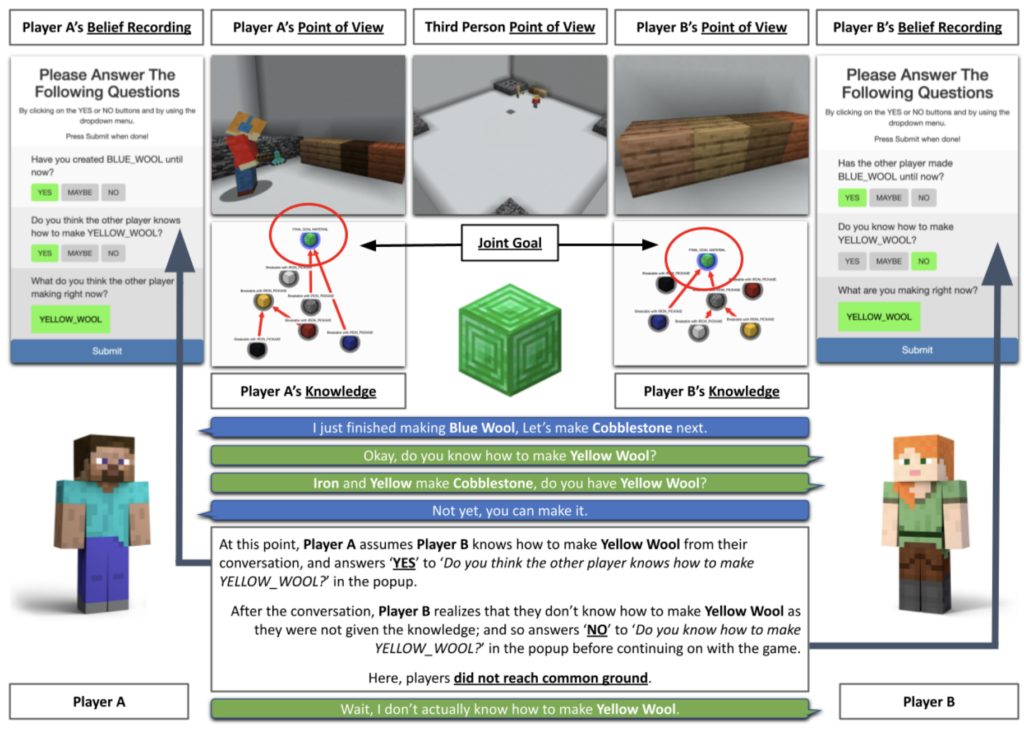

To model this awareness, the researchers developed a dataset of collaborative tasks performed by pairs of humans. Their setting of choice is Minecraft, the popular 3D block-building video game. In these task scenarios, the two partners collaborate to create new materials by combining blocks in the game.

Their dataset records the partners’ beliefs about the world and about each other as their interactions unfold, with an emphasis on scenarios where both participants have a similar level of understanding of the task and there’s no clear leader.

“This brings abundant opportunities to study human collaborative behaviors,” the authors write. In particular, they say, this will give researchers a look at how humans interact and understand each other in specific collaborative situations. “Our data captures an evolution of the states of mind of our participants that are true representations of their beliefs—not simply proxies for the true sequence of events in a collaborative session.”

The authors hope this dataset and the studies it enables will be a first step toward developing embodied AI agents that can make inferences about the people around them. They also built several computational models that can explicitly predict “key elements of a collaboration partner’s mental state from the viewpoint of an agent as a task unfolds.”

Their results show that, while language is important to inferences like these, the shared physical environment and perceived activities play a greater role in shaping the partners’ understanding of each other and in helping them reach common ground.